In the courses we have taken, the policies on appropriate uses of artificial intelligence vary widely. Some professors allow the use of AI in most cases (with a citation) short of writing a paper; others forbid it entirely.

Other professors strike a middle ground, distinguishing between acceptable and unacceptable uses of these tools. For instance, it might be permitted to use them to point out flaws in one’s argument or potential objections but impermissible to use them to formulate one’s original argument.

Even these seemingly benign uses, however, miss something essential about a humanities education: The point is learning how to write, to read, and to think, even imperfectly. In days past, suggestions to improve one’s writing came from intellectual conversations with peers, and students had to learn to critique their own writing without the aid of a 24/7 personal assistant.

If we permit the use of generative AI in humanities classes, then students are no longer encouraged to develop their capacity for intellectual conversation with others, nor do they practice how to think creatively without the heavy-handed guidance of artificial intelligence.

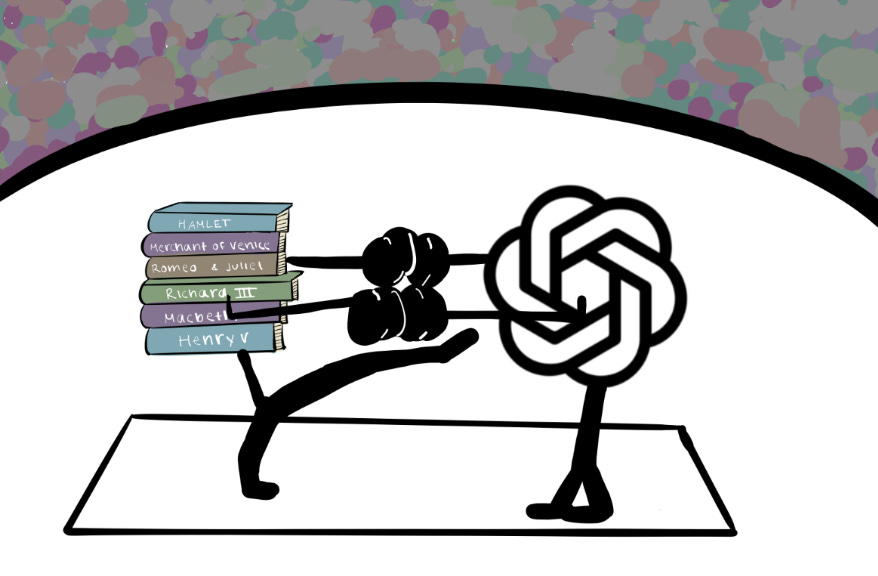

Moreover, the humanities lose their crucial human element (it is in the name, after all) with any use of generative AI. Central to philosophy, literature, or any other humanities field is the goal of understanding and communicating the human condition.

An AI chatbot lacks the subjective understanding of what it is to be human that motivates the humanities to begin with.

Read more | HARVARD CRIMSON