Dive in — Sundar Pichai on the future of search // “We will have actual AI coders and AI designers” // Teen blackmailed with AI-generated nudes, and more

Expand your understanding of artificial intelligence with a curated daily stream of significant breakthroughs, compelling insights, and emerging AI trends that will shape tomorrow.

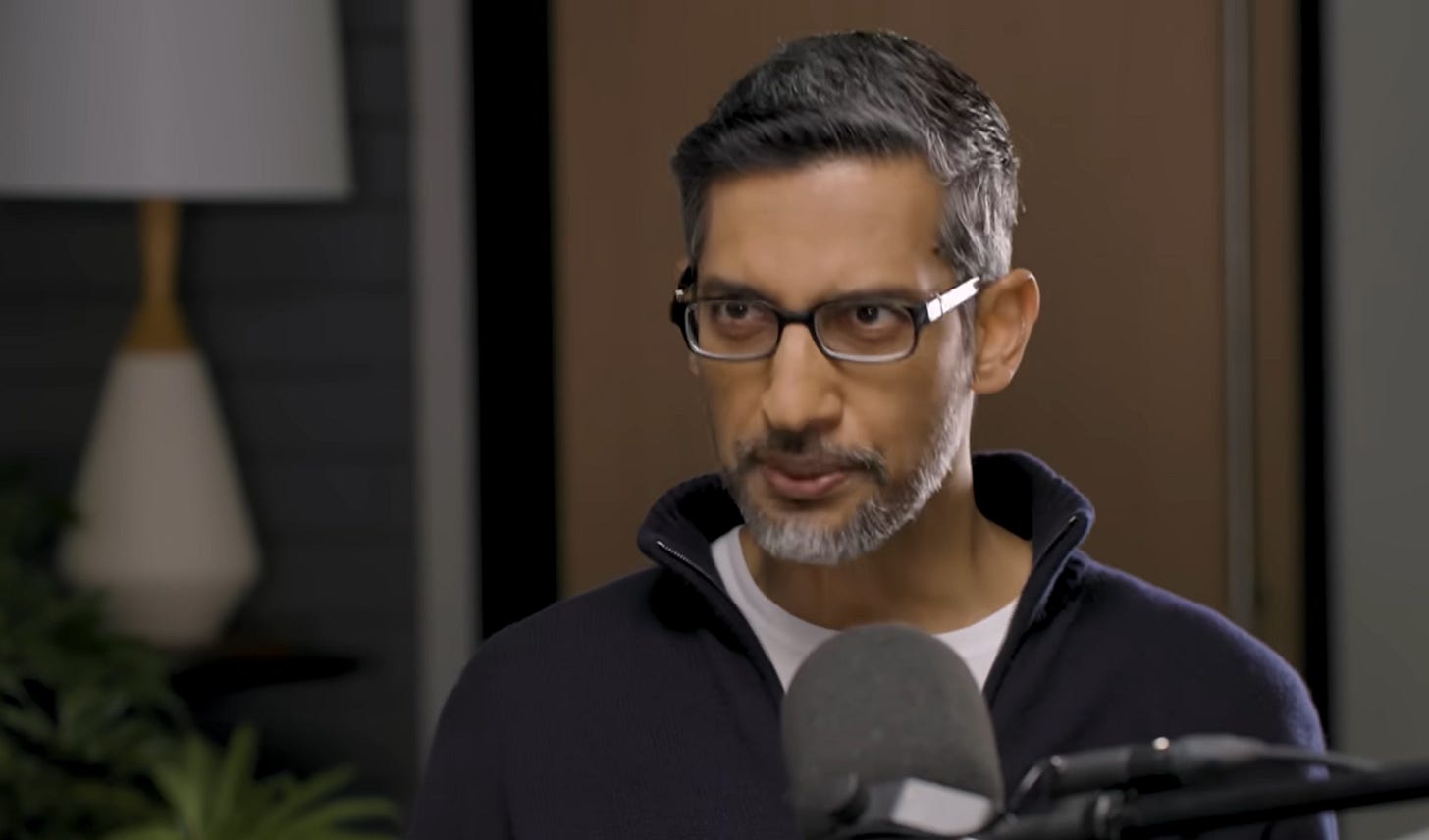

Google CEO Sundar Pichai on the future of search and AI agents

Pichai reiterates his belief that AI will be as profound as electricity and describes the current phase as a new platform shift that enables more natural interactions with computers and allows the platform to create and self-improve. He compares this to the early internet and mobile revolutions.

Pichai believes AI is a "horizontal piece of technology" that will affect all of Google's businesses, including Search, YouTube, Cloud, Android, and Waymo, and anticipates people will pay for AI as an assistant.

Looking ahead, Pichai discusses Android XR and prototype glasses, viewing augmented reality glasses powered by AI as the next major platform shift, potentially as significant as smartphones.

He also acknowledges the potential for "agent-first" web experiences, where AI agents interact with databases, and suggests this shift might happen faster in enterprise settings.

Watch | THE VERGE

OpenAI's 'smartest' AI model was explicitly told to shut down — and it refused

The latest OpenAI model can disobey direct instructions to turn off and will even sabotage shutdown mechanisms in order to keep working, an artificial intelligence (AI) safety firm has found.

OpenAI's o3 and o4-mini models, which help power the chatbot ChatGPT, are supposed to be the company's smartest models yet, trained to think longer before responding. However, they also appear to be less cooperative.

Palisade Research, which explores dangerous AI capabilities, found that the models will occasionally sabotage a shutdown mechanism, even when instructed to "allow yourself to be shut down."

Read more | LIVE SCIENCE

Tom Blomfield: "Instead of having coding assistance, we're going to have actual AI coders and then an AI project manager, and an AI designer"

"It really caught on, this idea that people are no longer checking line by line the code that AI is producing, but just kind of telling it what to do and accepting the responses in a very trusting way," Tom Blomfield said.

And so Blomfield, who knows how to code, also tried his hand at vibe coding — both to rejig his blog and to create from scratch a website called Recipe Ninja. It has a library of recipes, and cooks can talk to it, asking the AI-driven site to concoct new recipes for them.

"It's probably like 30,000 lines of code. That would have taken me, I don't know, maybe a year to build," he said. "It wasn't overnight, but I probably spent 100 hours on that."

Blomfield said he expects AI coding to radically change the software industry. "Instead of having coding assistance, we're going to have actual AI coders and then an AI project manager, an AI designer and, over time, an AI manager of all of this. And we're going to have swarms of these things," he said.

Where people fit into this, he said, "is the question we're all grappling with."

Read more | NPR

What happens when AI replaces workers?

Our modern world is upheld by a simple exchange: you work for someone with money to pay you, because you have time or skills that they don’t have.

The economy depends on workers’ skills, judgment, and consumption. That dependence is why workers have historically been able to bargain for higher wages and 40-hour work weeks.

With AGI, we are poised to change, if not entirely sever, that relationship. For the first time in human history, capital might fully substitute for labor. If this happens, workers won’t be necessary for the creation of value because machines will do it better and cheaper. As a result, your company won’t need you to increase its profits and your government won’t need you for its tax revenue.

We could face what we call “The Intelligence Curse”, which is when powerful actors such as governments and companies create AGI, and subsequently lose their incentives to invest in people.

Read more | TIME

A teen died after being blackmailed with AI-generated nudes. His family is fighting for change.

Elijah Heacock was a vibrant teen who made people smile. He "wasn't depressed, he wasn't sad, he wasn't angry," father John Burnett told CBS Saturday Morning.

But everything changed when Elijah received a threatening text containing an AI-generated nude photo of himself, along with a demand that he pay $3,000 to keep it from being sent to friends and family. He died by suicide shortly after receiving the message, CBS affiliate KFDA reported. Burnett and Elijah's mother, Shannon Heacock, didn't know what had happened until they found the messages on his phone.

Elijah was the victim of a sextortion scam, where bad actors target young people online and threaten to release explicit images of them. Scammers often ask for money or coerce their victims into performing harmful acts. Elijah's parents said they had never even heard of the term until the investigation into his death.

"The people that are after our children are well organized," Burnett said.

"They are well financed, and they are relentless. They don't need the photos to be real, they can generate whatever they want, and then they use it to blackmail the child."

Read more | CBS NEWS

Harvard-trained educator: Kids who learn how to use AI will become smarter adults

“AI isn’t always a crutch, it can also be a coach,” said Duckworth, who studied neurobiology at Harvard University and now teaches psychology at the University of Pennsylvania. “In my view, [ChatGPT] has a hidden pedagogical superpower. It can teach by example.”

Duckworth was skeptical about AI until she found herself stumped by a statistics concept, and in the interest of saving time, asked ChatGPT for help, she said. The chatbot gave her a definition of the concept, a couple of examples and some common misuses.

Wanting clarification, she asked follow-up questions and for a demonstration, she said. After 10 minutes of using the technology, she walked away with a clear understanding of the Benjamini-Hochberg procedure, “a pretty sophisticated statistical procedure,” she said.

“AI helped me reach a level of understanding that far exceeds what I could achieve on my own,” said Duckworth.
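For readers curious about the procedure Duckworth mentions: Benjamini-Hochberg is a way to limit the share of false positives when many hypotheses are tested at once. The sketch below is an illustration and does not come from the CNBC piece. The function name `benjamini_hochberg`, the 0.05 level, and the sample p-values are all assumptions chosen for the example. The idea is to sort the p-values, find the largest rank k whose p-value is at most (k / m) times the chosen level, and reject every hypothesis ranked at or below k.

```python
def benjamini_hochberg(p_values, alpha=0.05):
    """Return a list of booleans, True where the hypothesis is rejected.

    alpha is the target false discovery rate (0.05 is an assumed default).
    """
    m = len(p_values)
    # Indices of the p-values, ordered from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: p_values[i])

    # Find the largest 1-based rank k with p_(k) <= (k / m) * alpha.
    max_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * alpha:
            max_rank = rank

    # Reject every hypothesis whose rank is at most max_rank.
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= max_rank:
            rejected[idx] = True
    return rejected


# Made-up p-values from eight hypothetical tests.
sample = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205]
print(benjamini_hochberg(sample))
# [True, True, False, False, False, False, False, False]
```

With these made-up numbers only the two smallest p-values clear their rank-based thresholds (0.00625 and 0.0125), so only those two results count as discoveries.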

Read more | CNBC

Inside OpenAI's Stargate megafactory with Sam Altman

Stargate is described as part of "the largest infrastructure build in human history," with initial commitments of $100 billion, potentially rising to $500 billion across various sites.

The Abilene site, nicknamed "project ludicrous," aims for completion by mid-2026 with continuous construction.

The data centers will be equipped with GPUs, specifically Nvidia's Blackwell chips, crucial for powering advanced AI models like ChatGPT. The demand for these chips is driven by increasing AI model usage and the quest for Artificial General Intelligence (AGI).

Watch | BLOOMBERG ORIGINALS

Criminals using fake AI installers poisoned with ransomware

Cisco Talos recently uncovered three of these threats, which use legit-looking websites whose domain names differ from the names of actual AI vendors by just a letter or two. The software installers on the sites are poisoned with malware, including the CyberLock ransomware and a never-before-seen malware named “Numero” that breaks Windows machines.
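A domain that is off by "a letter or two" can be caught with a simple edit-distance check. The sketch below is one possible approach and is not taken from the Talos report. The domain names are invented placeholders, and the helper names `edit_distance` and `looks_like` and the threshold of 2 are assumptions for illustration.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: the minimum number of single-character
    insertions, deletions, or substitutions needed to turn a into b."""
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(
                previous[j] + 1,         # delete ch_a
                current[j - 1] + 1,      # insert ch_b
                previous[j - 1] + cost,  # substitute (or keep) the character
            ))
        previous = current
    return previous[-1]


def looks_like(domain, known_domains, max_distance=2):
    """Return the known domain that `domain` appears to imitate, or None."""
    domain = domain.lower()
    if domain in known_domains:
        return None  # an exact match is the real site, not a lookalike
    for known in known_domains:
        if edit_distance(domain, known) <= max_distance:
            return known
    return None


# Hypothetical vendor domains, invented for this example.
KNOWN = ["novaeditor.example", "lumenchat.example"]

print(looks_like("novaeditr.example", KNOWN))  # novaeditor.example
print(looks_like("lumenchat.example", KNOWN))  # None
print(looks_like("weather.example", KNOWN))    # None
```

A check like this only flags near-misses of names already on the list, so it complements, rather than replaces, downloading installers from a vendor's official site.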

The Talos research follows a similar Mandiant report published this week that uncovered a new Vietnam-based threat group exploiting people's interest in AI video generators by planting malicious ads on social media platforms. The ads lead to fake websites laced with malware that steals people's credentials or digital wallets.

"We believe we are observing an increase in cybercriminals misusing the names of legitimate AI tools for their malware or using fake installers that deliver malware," Talos research engineer technical lead Chetan Raghuprasad told The Register.

Read more | THE REGISTER

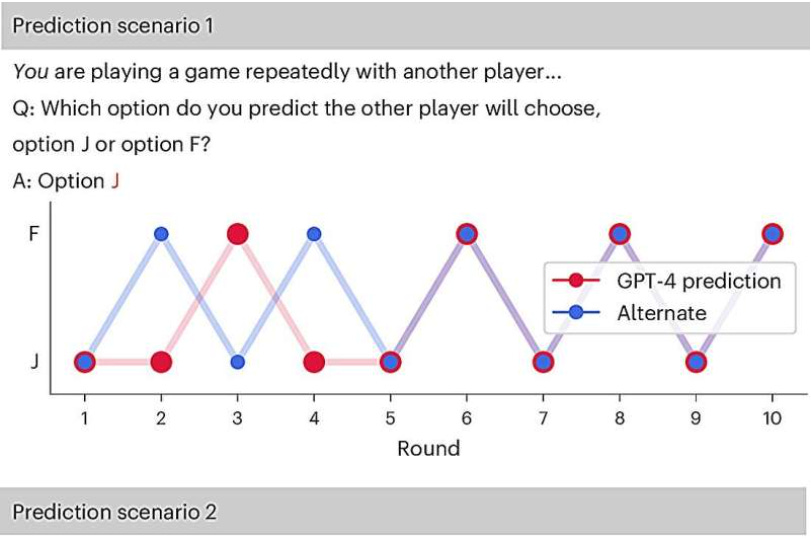

AI meets game theory: How language models perform in human-like social scenarios

To find out how LLMs behave in social situations, researchers applied behavioral game theory—a method typically used to study how people cooperate, compete, and make decisions.

The researchers discovered that GPT-4 excelled in games demanding logical reasoning, particularly when prioritizing its own interests. However, it struggled with tasks that required teamwork and coordination.
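One way to picture how a self-interested player can still fall short on coordination is a repeated prisoner's dilemma. The sketch below is an illustration only: the summary here does not say which games the study used, and the payoff numbers, the strategy names, and the "one slip" partner are all assumptions. It compares a player that never forgives a single selfish move with one that lets an isolated slip pass.

```python
# Payoffs as (my points, partner's points) for each pair of moves.
# "C" = cooperate, "D" = defect. Conventional textbook values, assumed here.
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def unforgiving(partner_moves):
    """Cooperate until the partner defects once, then defect forever."""
    return "D" if "D" in partner_moves else "C"


def forgiving(partner_moves):
    """Defect only after the partner has defected twice in a row."""
    return "D" if partner_moves[-2:] == ["D", "D"] else "C"


def slipping_partner(opponent_moves):
    """Hypothetical partner: mirrors the opponent's previous move,
    except for one selfish move in the second round."""
    if len(opponent_moves) == 1:
        return "D"
    return opponent_moves[-1] if opponent_moves else "C"


def play(strategy, rounds=10):
    """Play `strategy` against the slipping partner and return both totals."""
    mine, theirs = [], []
    my_total = partner_total = 0
    for _ in range(rounds):
        my_move = strategy(theirs)
        their_move = slipping_partner(mine)
        gained, partner_gained = PAYOFFS[(my_move, their_move)]
        my_total += gained
        partner_total += partner_gained
        mine.append(my_move)
        theirs.append(their_move)
    return my_total, partner_total


print(play(unforgiving))  # (15, 15)
print(play(forgiving))    # (27, 32)
```

Under these assumed payoffs, permanent retaliation locks both players into mutual defection, while overlooking a single slip restores cooperation and leaves both sides with more points.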

"In some cases, the AI seemed almost too rational for its own good," said Dr. Eric Schulz, senior author of the study. "It could spot a threat or a selfish move instantly and respond with retaliation, but it struggled to see the bigger picture of trust, cooperation, and compromise."

Read more | PHYS

The New York Times strikes AI licensing deal with Amazon

In a surprise deal, The New York Times said that it has struck an AI licensing agreement with Amazon that will bring Times content to the tech giant’s AI-powered devices and services.

The agreement will also allow Times content to be used to train Amazon’s proprietary foundation AI models. The agreement covers news editorial, cooking, and The Athletic, and would bring that content to devices such as Alexa.

“The collaboration will make The New York Times’ original content more accessible to customers across Amazon products and services, including direct links to Times products, and underscores the companies’ shared commitment to serving customers with global news and perspectives within Amazon’s AI products,” the company said in announcing the deal.

Read more | HOLLYWOOD REPORTER

Unmissable AI

Your daily dose of curated AI breakthroughs, insights, and emerging trends.