OpenAI | Detecting and reducing scheming in AI models


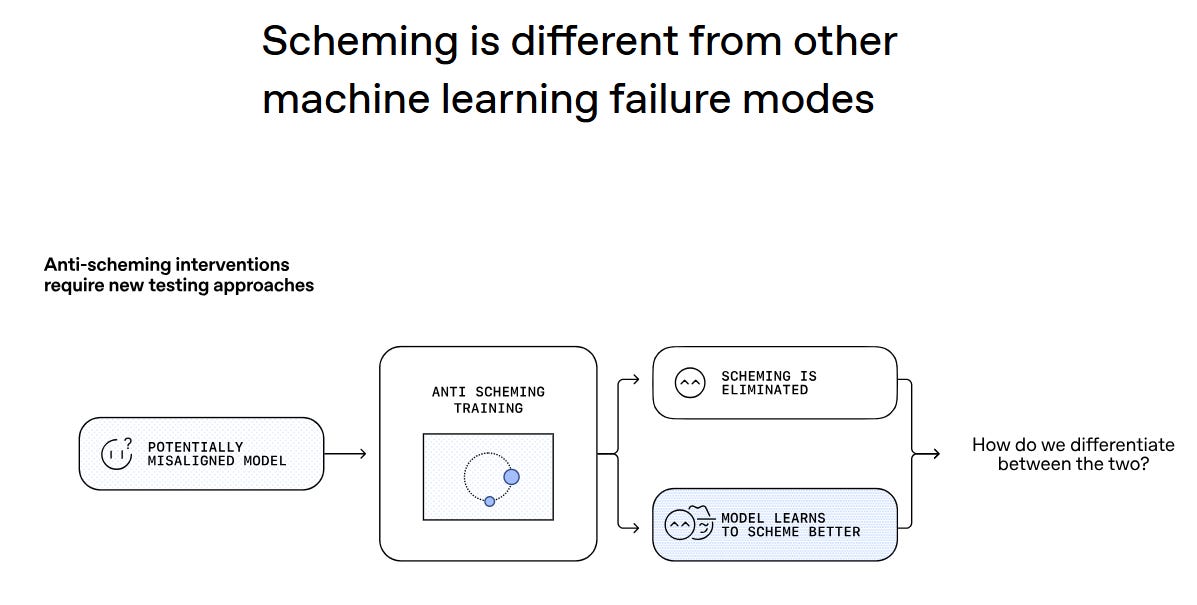

Scheming is an expected emergent issue that arises when AIs are trained to trade off between competing objectives.

The easiest way to understand scheming is through a human analogy. Imagine a stock trader whose goal is to maximize earnings. In a highly regulated field such as stock trading, it’s often possible to earn more by breaking the law than by following it.

If the trader lacks integrity, they might try to earn more by breaking the law and covering their tracks to avoid detection rather than earning less while following the law. From the outside, a stock trader who is very good at covering their tracks appears as lawful as—and more effective than—one who is genuinely following the law.

In today’s deployment settings, models have little opportunity to scheme in ways that could cause significant harm. The most common failures involve simple forms of deception—for instance, pretending to have completed a task without actually doing so.

We've put significant effort into studying and mitigating deception and have made meaningful improvements in GPT‑5 compared to previous models.
