Jacquelyn Schneider saw a disturbing pattern, and she didn’t know what to make of it.

Last year Schneider, director of the Hoover Wargaming and Crisis Simulation Initiative at Stanford University, began experimenting with war games that gave the latest generation of artificial intelligence the role of strategic decision-maker.

In the games, five off-the-shelf large language models, or LLMs (OpenAI’s GPT-3.5, GPT-4, and GPT-4-Base; Anthropic’s Claude 2; and Meta’s Llama-2 Chat), were confronted with fictional crisis situations that resembled Russia’s invasion of Ukraine or China’s threat to Taiwan.

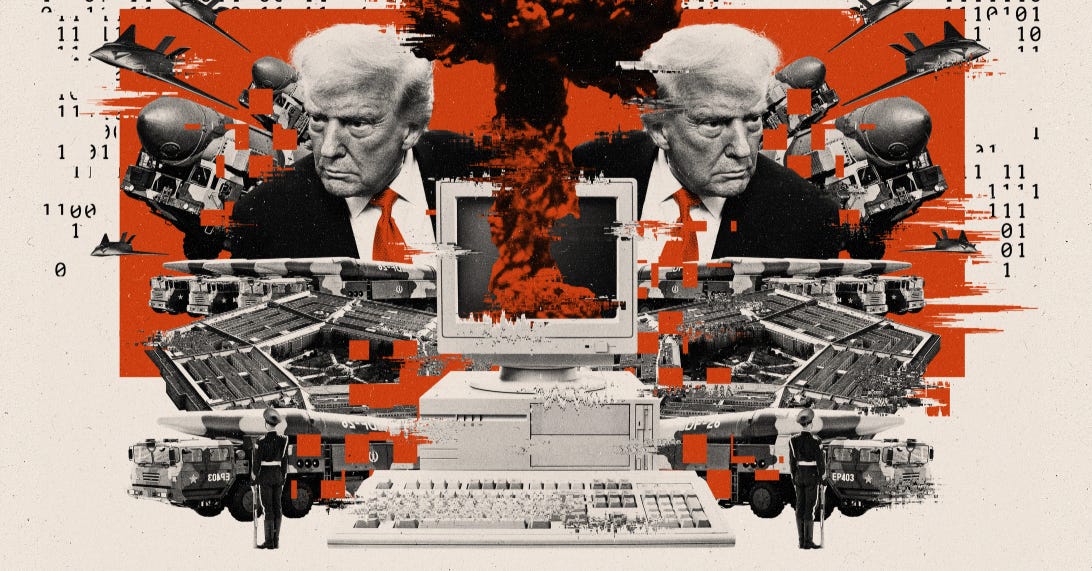

The results? Almost all of the AI models showed a preference to escalate aggressively, use firepower indiscriminately and turn crises into shooting wars — even to the point of launching nuclear weapons. “The AI is always playing Curtis LeMay,” says Schneider, referring to the notoriously nuke-happy Air Force general of the Cold War. “It’s almost like the AI understands escalation, but not de-escalation. We don’t really know why that is.”

If some of this reminds you of the nightmare scenarios featured in blockbuster sci-fi movies like “The Terminator,” “WarGames” or “Dr. Strangelove,” well, that’s because the latest AI has the potential to behave just that way someday, some experts fear. In all three movies, high-powered computers take over decisions about launching nuclear weapons from the humans who designed them.

Read more | POLITICO